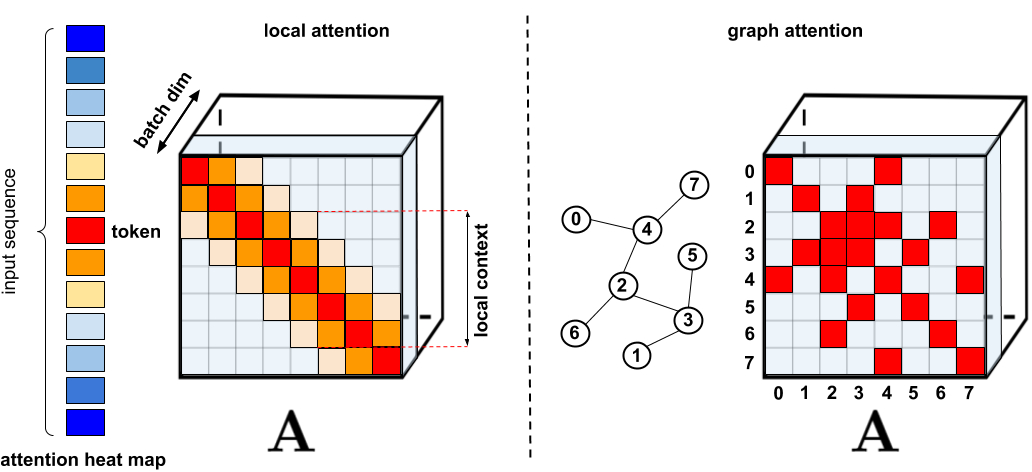

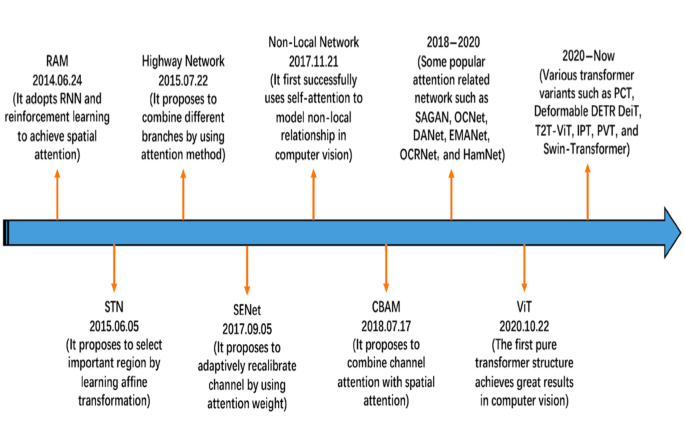

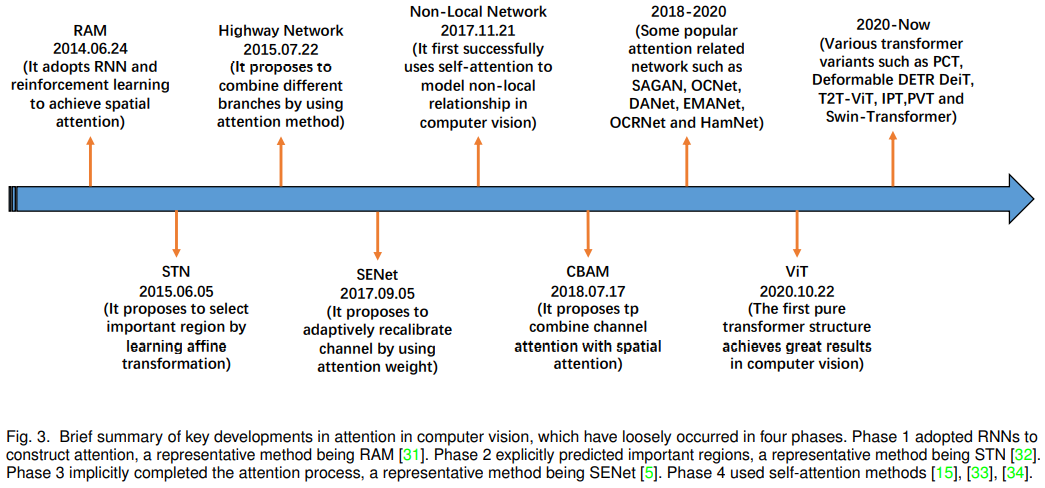

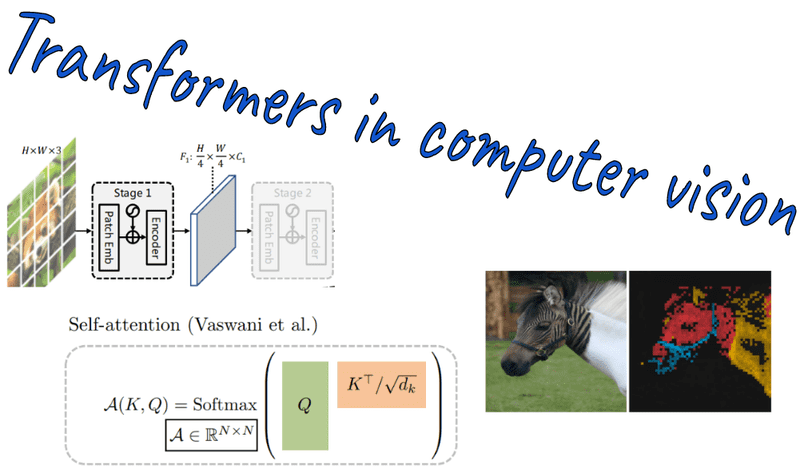

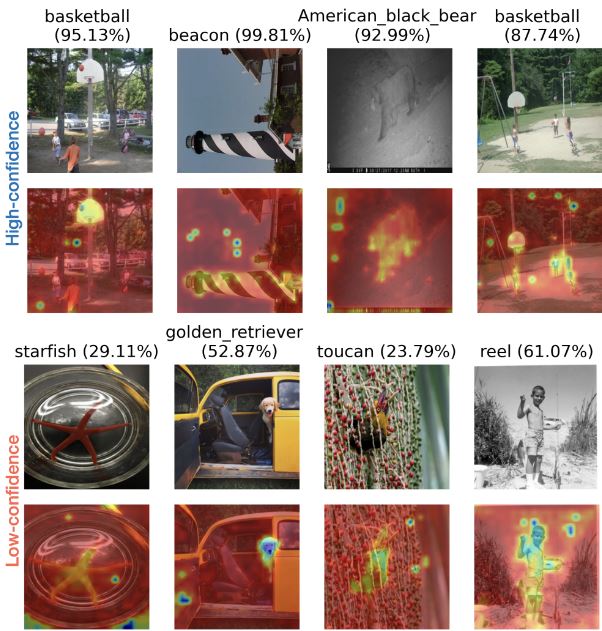

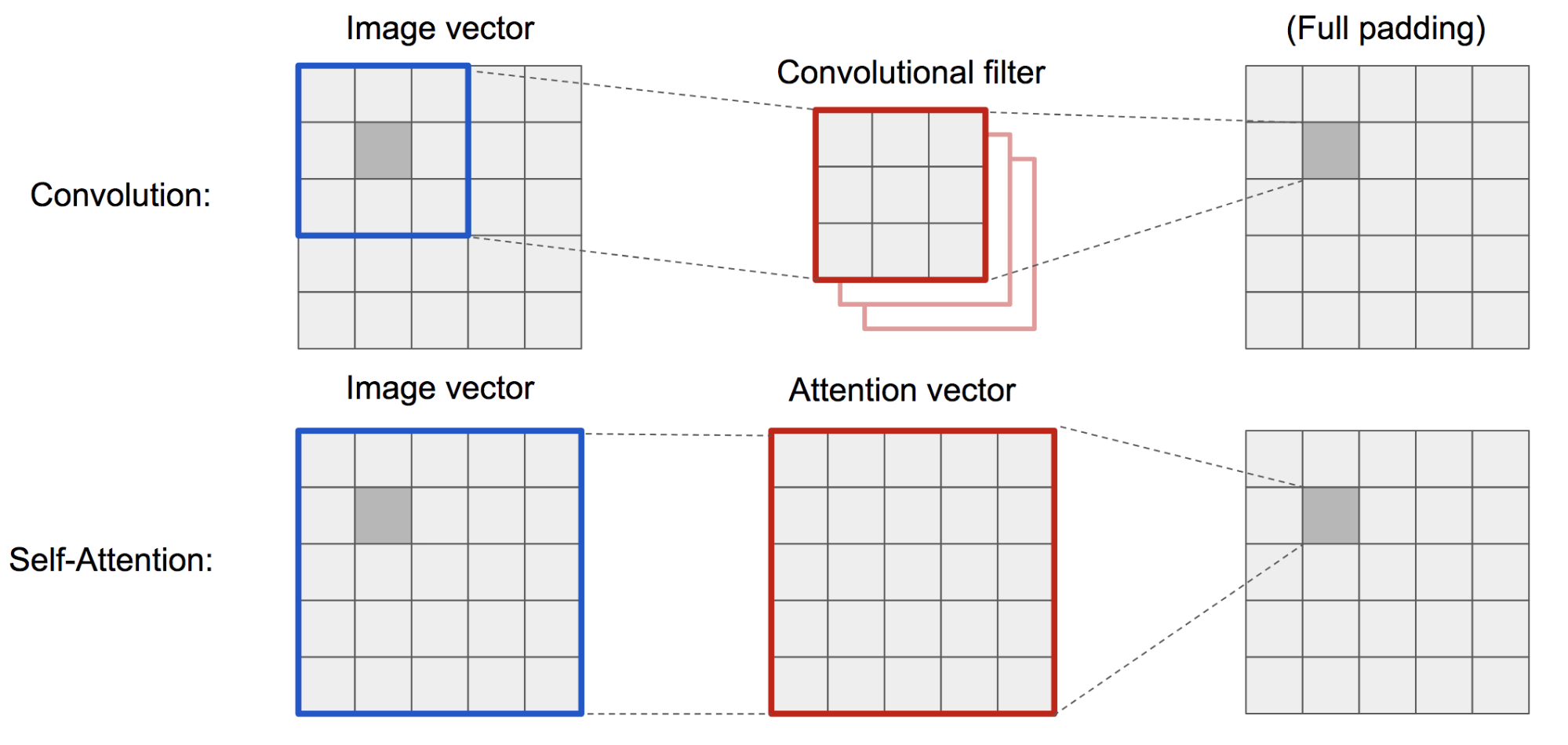

Chaitanya K. Joshi | @chaitjo@sigmoid.social on Twitter: "Exciting paper by Martin Jaggi's team (EPFL) on Self-attention/Transformers applied to Computer Vision: 'A self-attention layer can perform convolution and often learns to do so…'"

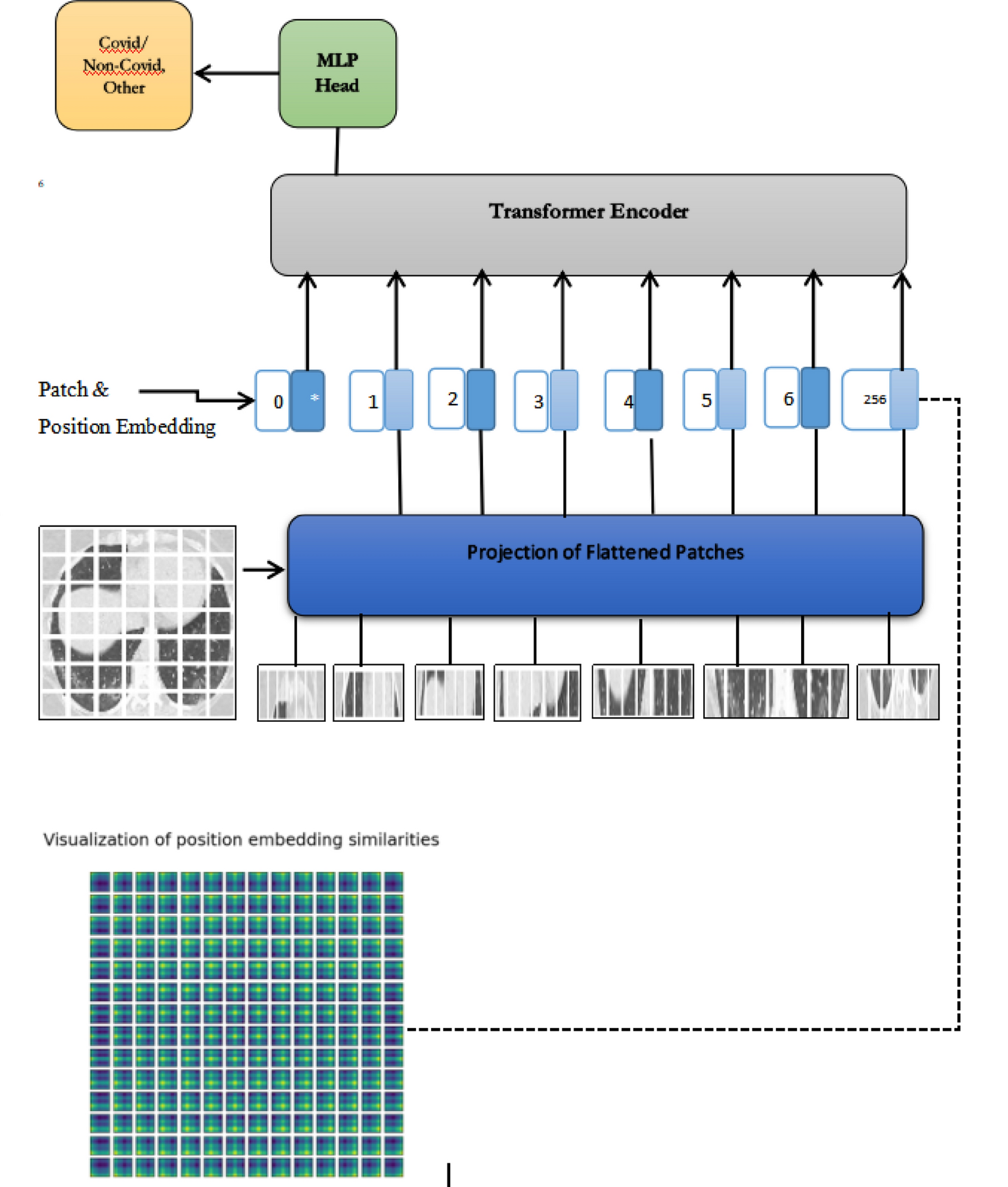

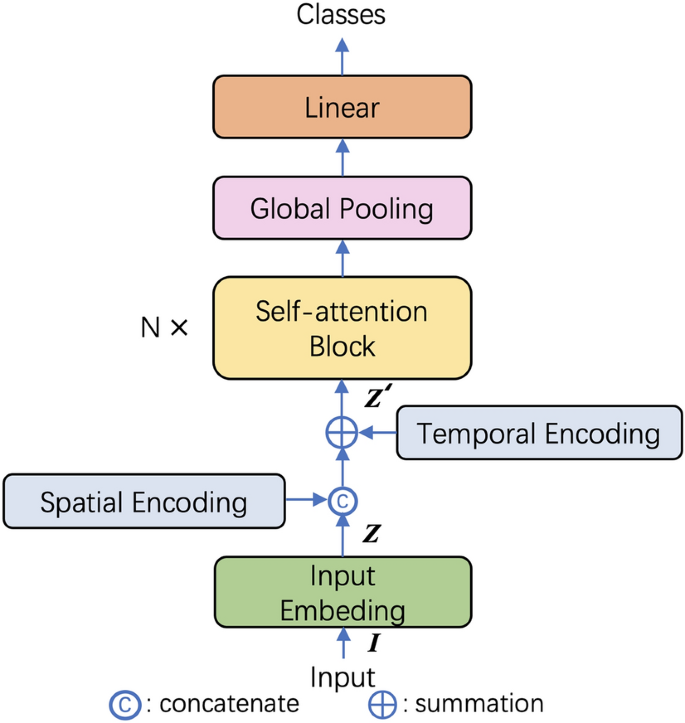

Vision Transformers: Natural Language Processing (NLP) Increases Efficiency and Model Generality | by James Montantes | Becoming Human: Artificial Intelligence Magazine

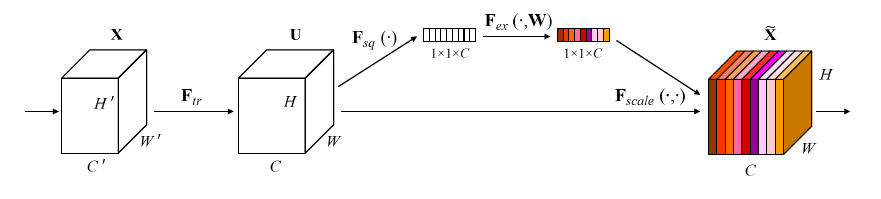

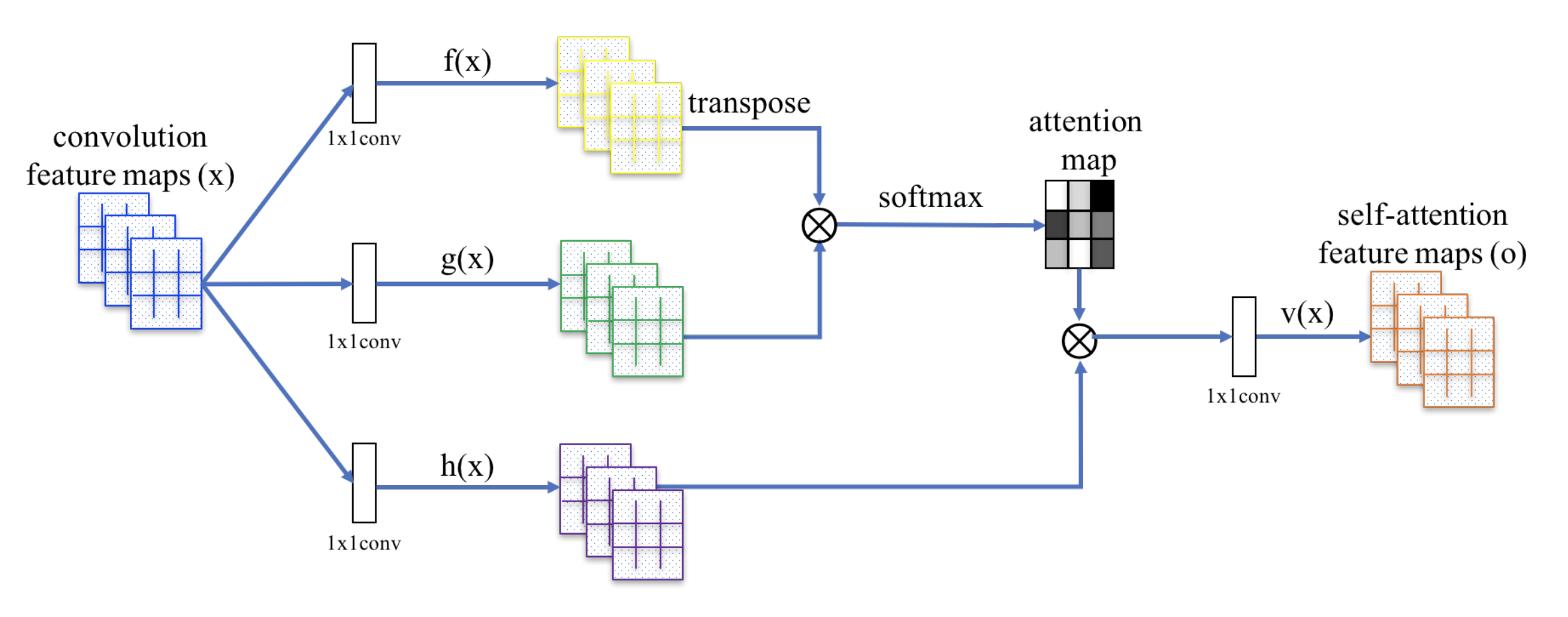

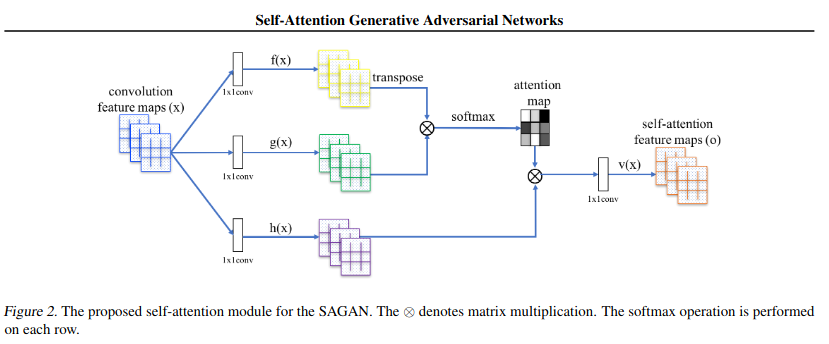

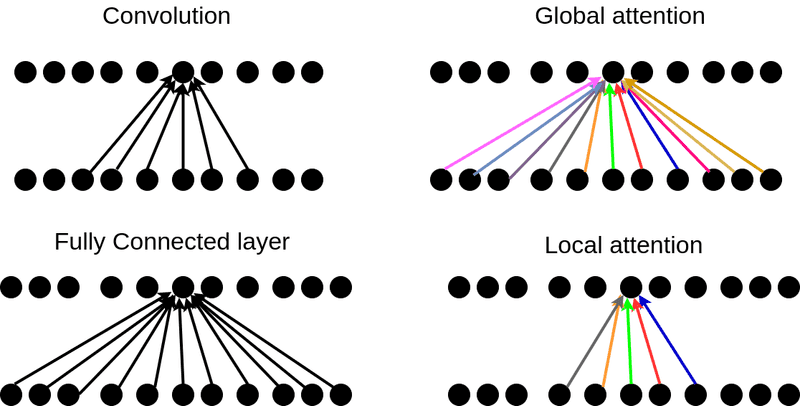

Tsinghua & NKU's Visual Attention Network Combines the Advantages of Convolution and Self-Attention, Achieves SOTA Performance on CV Tasks | Synced

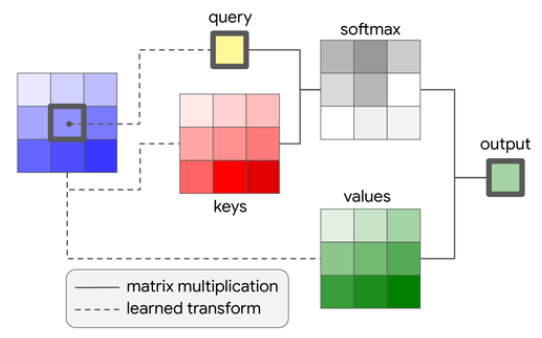

How Attention works in Deep Learning: understanding the attention mechanism in sequence models | AI Summer